3/2006

How we use the brain and mind to see and hear

by John P. Eberhard, FAIA

Founding president, the Academy of Neuroscience for Architecture

For a moment, let’s go back to our visit to the Cathedral at Amiens as I discussed in my first article. I suggested that walking into a cathedral was more than a visual or aural experience. However, in this article, I will concentrate on just those two most important activities of our sensory system.

It may seem obvious, but it is important to point out that we do not see the awe-inspiring interior of Amiens because there is a little projector inside our head sending pictures to a screen in our brain, like a slide projector in a client presentation. The brain deals with electrical and chemical signals (more fully discussed below), and the mind assembles these signals into a visual image. The same is true of hearing music as we walk into Amiens: There is no equivalent of a tape player broadcasting sounds into the hollow places of our skull. Electrical signals are created by sound waves as they impinge on those funny-looking pieces of flesh on both sides of your head called your ears.

Action potentials

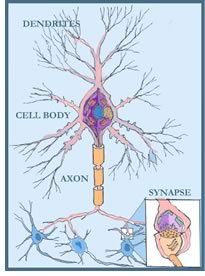

Neurons are the basic components of the brain. More than 10 billion of them are imbedded (glued in place by some 90 billion glial cells) in the cortex, where they provide continuous activity for the mind.

The major parts of a neuron are:

- The cell body, where important housekeeping functions occur, such as storing genetic material and making proteins and other molecules needed for the cell’s survival

- Axons and dendrites, which serve as output and input channels; axons carry messages to other cells, and dendrites receive incoming messages

- At the end of the axon are terminals called synapses that connect to other neurons, either via direct electrical signals across dendrites or by the release of chemicals from the storage sites of the axon’s terminals.

There is a concept in neuroscience called “action potentials” that needs to be understood before we discuss the details of vision and hearing. Every sensory experience that we have has as its goal activating one or more action potentials somewhere in the brain. It is useful to think about this activity as the creation of a single bit of information (i.e., one bit in the assembly of bits that form a visual image or the sound of an organ).

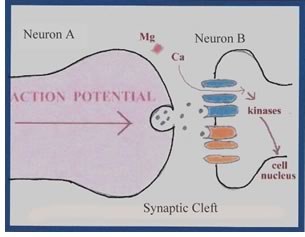

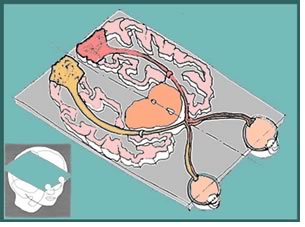

The drawing, left, shows a cross-section through two “pods” (axon terminals) on two different neurons. These pods are separated by a very narrow space (called the synaptic cleft) across which chemicals (called neurotransmitters) flow. When an electrical signal is received by the dendrites of Neuron A (in the illustration below) a chemical reaction begins that will eventually release neurotransmitters from its pod. This creates an “action potential,” something that is likely to happen, but has not yet happened. At this point in the process, Neuron A is said to have the “presynaptic terminal (or pod).” After an actual chemical exchange, Neuron B is said to have the “postsynaptic terminal.”

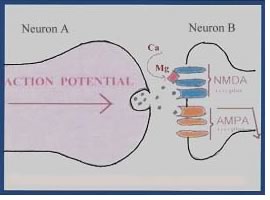

There is a very clever locking mechanism (labeled NMDA) on the terminal of Neuron B that allows the chemicals contained in a neurotransmitter to flow one way. However, once the neurotransmitters have made it across the synaptic cleft, there is a reaction that releases this locking mechanism (actually a magnesium ion), which allows a chemical (calcium) to flow between A and B, and a feedback loop is established. Once established, Neuron A can more efficiently send additional neurotransmitters across to Neuron B, establishing what is called “Long-Term Potentiation” (LTP).

This LTP binds the two neurons together for any future activities that come along the same path. So, if a child is studying the ABCs, or a musician is practicing on the piano, or you are learning to play tennis, the brain can guide visual and aural experiences in a manner that produces a series of LTPs.

This key process is what our brain does when the circuits in our visual system or auditory system are activated. It is the accumulation of the LTPs created in either system that makes seeing or hearing a learning process.

Creating visual images

Let’s consider the process that creates visual images. Visual images begin with a stream of photons that strike the retina of each eye. There, a thin layer of neurons and glial cells that line the inside surface of the eyeball captures and transduces the photons into changes in voltage. The rods that perform this function translate these messages as black, white, or shades of gray. The cones are sensitive to different wavelengths of light. One type responds especially to the long (red) wavelengths, another to the medium (green) wavelengths, and the third type to short (blue) wavelengths. A small network of neurons in the retina starts to analyze this signal and converts it into a firing rate. The resulting cell activity signals the brain centers to move our eyes and focus our foveas (the small, central regions of the retina used to discern details) on the target.

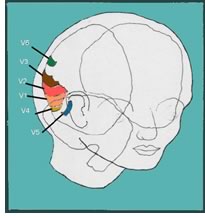

From this point on, the visual signals are transmitted along the optic nerve to the back of the head and then subdivided into multiple pathways. As shown in the diagram above, most of the signals from the left eye go to the right side of the visual cortex, and vice versa. Each of these separate pathways is a parallel process that allows analysis and feedback to be done more quickly than if it were carried out sequentially. The outputs from regions V1 to V5 provide inputs to various regions of the brain’s cortex. For example, the parietal areas deal mainly with where images are in space, and the temporal cortical areas analyze the form of the objects to provide information on what is being seen.

The six specialized areas of the visual cortex are:

- V1, which combines all of the inputs

- V2, which produces stereo vision (two images made up of dots)

- V3, which adds depth and distance (a kind of topographic process)

- V4, which overlays color (but not exclusively in this area)

- V5, which “freezes” motion for a split second to allow recognition to take place

- V6, which designates the specific position of the object being viewed.

There is still disagreement about whether there are additional areas beyond these six.

You might wonder at this point if there is a place in the brain where all of these inputs and outputs are assembled into one image and projected onto some sort of viewing screen. The answer is no. Neuroscientists call the assembly of these separate bits of information a “binding” process. As indicated earlier, we have a functional activity in the thalamus that sweeps the brain at 700 times per second looking for neurons that are oscillating at 40 hertz. When these are found, they are “bound” together in some way that is not entirely understood. However, we do see one image (if we have normal vision), and we see it in color and in focus, one of the many spectacular abilities of our brains.

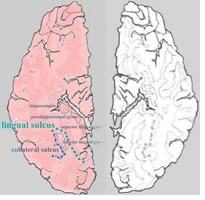

Just in the last few years, it has been discovered that there is a special area of the brain “tuned” to recognition of buildings. The drawing shows an axial tissue section of the human brain, showing the location of the “right lingual sulcus,” the area specialized for the perception of buildings (as seen from below using an fMRI brain scan). The green dots are the “voxels” (collections of neurons) activated for this purpose. One can speculate that we have acquired this specialized perception to find our way in complex landscapes, or it may be that our desire for finding and knowing our “home” made this response persist.

How we hear

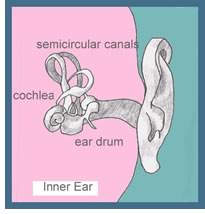

The configuration of the human ear is just one remarkable part of our auditory system. We are able to hear accurately a crumb drop on the floor in a quiet room and, at the other extreme, the roar of a jet engine or the noise of a rock band. We can discriminate sounds from the deep bass of an organ, measured at 20 Hz and so deep that we can feel it as well, to a high-pitched sound in the range of 20,000 Hz.

Sound waves flow along the ear canal from our outer ears to the eardrum, where they vibrate the three delicate bones of the middle ear. These vibrations are then transmitted to the fluid-filled cochlea (which is partially protected from damaging loud sounds by brain signals to the bones of the middle ear). As was true with vision, the sounds coming into the left ear are primarily transmitted to the right side of the brain, and vice versa. It’s the difference in sound arriving in the auditory cortex from one side and then the other that makes it possible for us to know the direction from which sounds are coming.

The auditory system simultaneously deals with many aspects of sound, including loudness, pitch, and harmonics, as well as the timing of multiple sounds and where they are coming from. It does this with a system of parallel processing, as is true of the visual system. Once a sound has been processed by both ears, it is sent via the cochlea to the thalamus, from whence it is relayed to either the A1 or A2 areas of the auditory cortex. These cortical areas, located in the temporal lobes of the brain, again form feedback loops to the thalamus so that slight delays in the signals from each ear can provide bits of information used in discriminating the direction of the sounds.
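
To give a feel for how slight these delays are, here is a minimal sketch of a simplified textbook model, not a description of what the brain actually computes. It assumes a distant sound source, a straight-line path to each ear, an ear-to-ear spacing of about 0.18 m, and a speed of sound of 343 m/s. Those two numbers and the function names are illustrative assumptions, not figures from this article.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: air at roughly room temperature
EAR_SPACING_M = 0.18            # assumed: rough adult ear-to-ear distance


def interaural_delay_s(angle_deg: float) -> float:
    """Arrival-time difference between the two ears for a distant source.

    angle_deg is measured from straight ahead (0) toward one side (90).
    Simplified model: the extra path to the far ear is spacing * sin(angle).
    """
    extra_path_m = EAR_SPACING_M * math.sin(math.radians(angle_deg))
    return extra_path_m / SPEED_OF_SOUND_M_PER_S


def angle_from_delay_deg(delay_s: float) -> float:
    """Invert the model: recover the source angle from a measured delay."""
    ratio = delay_s * SPEED_OF_SOUND_M_PER_S / EAR_SPACING_M
    ratio = max(-1.0, min(1.0, ratio))  # clamp against rounding error
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    for angle in (0, 30, 90):
        delay_us = interaural_delay_s(angle) * 1e6
        print(f"{angle:>2} degrees -> {delay_us:.0f} microseconds")
```

Under these assumptions, even a sound coming directly from one side reaches the far ear only about half a millisecond late, which suggests how fine the timing information used for direction-finding must be.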

One other important function of the auditory cortex is to provide output to the regions of the brain that deal with language. There is a special area known as Broca’s area (after the French neurologist Paul Broca, who discovered this functional area in the 1800s) where speech is formed, and a second area called Wernicke’s area (after Carl Wernicke), where understanding of language occurs. A person with disease or damage to Broca’s area loses the ability to speak; a person with disease or damage to Wernicke’s area no longer comprehends the spoken word.

Why architects should know about these matters

“Greek architecture does not amaze and overwhelm with mere scale and complexity; it has vigor, harmony, and refinement that thrill the mind as well as the eye.”

From Architecture: From Prehistory to Post-Modernism the Western Tradition, by Marvin Trachtenberg and Isabelle Hyman.

As I hope you have learned in this article, there is a strong link between our visual ability and our minds. This is especially true of architects and their creations. Most of the design concepts we learn in school and read about in our publications are based entirely on visual material, often photographs.

We have a long history of using our intuitive understanding of the visual appearance of buildings to shape our designs. For example—probably using freehand drawings and empirical guidelines—ancient Greek architects invented entasis, a way of shaping columns to avoid the optical illusion of hollowness that the mind would perceive in normal tapering.

Trachtenberg and Hyman go on to say, “The sublime creation of High Classic Doric architecture was the Parthenon on the Athenian Acropolis. The building was erected between 447 and 438 B.C. Its unity of proportion produced its uncanny harmony. It is what the Greeks called ‘frozen music,’ a metaphor for celestial harmonies. The remarkable visual developments in the Parthenon are its optical refinements that involve variations from the perpendicular and especially from straight lines. Hardly a single true straight line is to be found in the building. Historians believe these optical refinements contribute to the visible grace of the temple and to its vitality.”

The Supreme Court building (and hundreds of other courts and banks) used this arrangement to provide classical dignity as perceived by the lay person. In addition to the dispositions we store of buildings we have seen and admired, it seems likely that we all have “filters” in our visual cortex that respond positively to these “optical refinements” and “visible grace.”

Copyright 2006 The American Institute of Architects. All rights reserved.

Drawings by the author.

For more information, visit the Academy of Neuroscience for Architecture Web site.